Today we are standing on the shoulders of giants. Tomorrow, thanks to AI, we will be sitting on their heads.

A lot of human intelligence is required to implement artificial intelligence.

“Machine intelligence is the last invention that humanity will ever need to make” – Nick Bostrom

Deep learning has greatly changed the landscape of machine learning and artificial intelligence in the last ten years. In 2018, professors Yoshua Bengio, Geoffrey Hinton, and Yann LeCun, pioneers of deep learning, received the prestigious ACM A.M. Turing Award for “conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing”. This chapter reviews the fundamentals of deep learning. Other chapters will cover its applications in computer vision and natural language processing. Deep learning is covered in great detail in (Goodfellow et al., 2016) and in the documentation of TensorFlow and PyTorch.

The work on artificial neurons started in the 1930s and 1940s. In 1943, McCulloch and Pitts proposed that “neural events and the relations among them can be treated by means of propositional logic. It is found that the behavior of every net can be described in these terms.” In 1958, Frank Rosenblatt, a researcher at the Cornell Aeronautical Laboratory funded by the Office of Naval Research, invented the Perceptron to perform image recognition using photocells and a one-layer neural network. It could, however, perform only rudimentary image classification tasks.

Figure 1. Description of Mark I Perceptron.

Source: https://apps.dtic.mil/dtic/tr/fulltext/u2/236965.pdf

Deep learning is a branch of machine learning that uses layers of functions, described as neurons, linking inputs to outputs. The inputs form an input layer, which can be a numerical value, a vector, a matrix, or a multidimensional array (a tensor). The input can represent a picture, a video frame, some text, a sound wave, or any data collected by a sensor. Each function acts as a neuron with inputs and outputs. The function can be linear or nonlinear. When it is nonlinear, it works as an activation function: its output is very small when the combined inputs are sufficiently small and increases in value when the combined inputs are sufficiently large. The outputs in turn form an output layer. Between the input and the output layers, there can be several hidden layers (Figure 2).

Figure 2. A neural network with an input layer, a hidden layer, and an output layer.

The inspiration for the artificial neuron is the human neuron (Figure 3). A human neuron has a cell body called the soma, receives nerve signals through its dendrites, and sends an output signal through the axon to other neurons or to other cells such as muscle cells. The axon connects to the dendrite of another neuron and forms a synapse. The signal can be electrical, carried by moving ions, or chemical, carried by neurotransmitters. Each neuron is in contact with on the order of 1,000 other neurons. It is estimated that there are around 86 billion neurons in the brain. In addition to neurons, there are glial cells, which play important roles in supporting the neurons. They were long said to outnumber neurons by a factor of ten, although more recent counts suggest a ratio closer to one to one.

Figure 3. Neuron

By BruceBlaus – Own work, CC BY 3.0, https://commons.wikimedia.org/w/index.php?curid=28761830

The human brain appears to be vastly more complicated than a neural network. This should not be a concern, as there are many examples of artificial technologies playing the same role as natural ones: the wings and jet engines of a plane replacing the wings and muscles of a bird, or the combustion engine replacing carriage horses.

To be fair, the artificial neural network does not run in a vacuum. If we include the software, hardware, and power required to run the neural network (the brain cells have to generate their own power), the complexity can be as large. For instance, the Wafer Scale Engine 2 by Cerebras, a deep-learning integrated circuit chip announced in 2021, has 2.6 trillion transistors. A Graphics Processing Unit (GPU) can have more than 20 billion transistors, and it is not uncommon to run hundreds of GPUs in parallel to train some deep neural networks.

Figure 4 shows a feedforward neural network. It is feedforward because the information flows from the inputs to the outputs in only one direction, forward. Some other neural networks such as recurrent neural networks allow loops, with information moving backward.

The network can be described as a sequence of layers: an input layer, hidden layers that each have a number of inputs and outputs and apply an activation function, and an output layer. Each layer holds a state made of learnable parameters such as weights and biases and performs some computation such as multiplying the inputs by the weights and adding the biases.

Figure 4. Neural network defined as a sequence of layers

The layers can be of different types:

The input layer is a tensor object with an indication of the input shape, e.g. (n,), and the batch size m. Each observation is an n-dimensional vector and the model takes m observations at a time.

The dense layer takes the inputs from the previous layer, multiplies them by some weights, adds some bias terms, and transforms the result through an activation function. The activation function is typically a ReLU (rectified linear unit), which returns the maximum of its input value and zero (max(value, 0)). Another popular activation for classification problems is the softmax activation. In the softmax, outputs are converted to probabilities between 0 and 1 by taking the exponential of their values and normalizing them so that they add up to 1.

Figure 5. ReLU activation

Figure 6. Softmax activation

The activation layer transforms input values with some functions similar to the ones used in the dense layer or more complex functions.

The embedding layer transforms the input values into vector representations. This is commonly used in Natural Language Processing (word embedding), where indexed words are converted to vector representations such as Word2Vec (Mikolov et al., 2013). Words with similar meanings will tend to be close in the vector space, and relationships between words will tend to be similar in that space. Closeness is measured by some distance.

A masking layer discards certain input values for instance because they are missing. Missing values could be coded as 0 and the mask value will be 0.

A lambda layer allows arbitrary calculations on previous layers. It works as an activation layer but is more general as it can for instance make calculations with multiple input layers.

A subclass layer will modify an existing class layer and add new states and computation methods. For instance, input layers can be combined and go through a new computation to produce new outputs.
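As an illustration of the dense layer and of the ReLU and softmax activations described above, here is a minimal sketch in plain Python. The function names, the weights, and the input values are hypothetical and chosen only for the example; frameworks such as TensorFlow and PyTorch provide their own optimized layers.

```python
import math

def relu(values):
    """Rectified linear unit: max(v, 0) applied element by element."""
    return [max(v, 0.0) for v in values]

def softmax(values):
    """Exponentiate and normalize so the outputs are positive and sum to 1."""
    shift = max(values)  # subtracting the max avoids overflow; the result is unchanged
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases, activation):
    """One dense layer: activation(W x + b), with one row of weights per output."""
    pre_activation = [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]
    return activation(pre_activation)

# Hypothetical example: 3 inputs -> 2 hidden units (ReLU) -> 2 classes (softmax)
x = [1.0, -2.0, 0.5]
hidden = dense(x, [[0.2, -0.4, 0.6], [-0.5, 0.1, 0.3]], [0.1, 0.0], relu)
probabilities = dense(hidden, [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0], softmax)
```

The second hidden unit receives a negative weighted sum and is set to zero by the ReLU, and the two softmax outputs add up to 1.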

The successive transformations from the input layer through each layer up to the output layer form the feedforward propagation of the neural network. If all the network parameters are known, the propagation gives the model outputs. If the model needs to fit some output data, as in supervised learning, the parameters need to be learned with backward propagation.

Model training will adjust the model parameters such as the weights and biases to minimize some loss function.

The sum of squared errors is often used for regression problems. It is calculated as the sum of the squared differences between predicted values and true values. If we take the mean, it becomes the mean squared error.

Other losses that can be used for regressions are the mean absolute error, the mean absolute percentage error, the mean squared logarithmic error, and the cosine similarity among others.

The cross-entropy loss is used for classification problems. It is calculated as the negative of the sum of the products between the true class probability values (so 0 or 1) and the logarithm of the predicted probability values.

The KL divergence loss can also be used for classification problems. It is calculated as the sum of the products between the true class probability values (so 0 or 1) and the logarithm of the ratio of true class probability values to the predicted probability values.
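The losses described above can be sketched in a few lines of plain Python. The function names are illustrative, and the small constant eps is an assumption added only to avoid taking the logarithm of zero.

```python
import math

def sum_squared_errors(y_true, y_pred):
    """Sum of squared differences between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred))

def mean_squared_error(y_true, y_pred):
    """Sum of squared errors divided by the number of observations."""
    return sum_squared_errors(y_true, y_pred) / len(y_true)

def cross_entropy(p_true, p_pred, eps=1e-12):
    """Negative sum of true probabilities times log predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(p_true, p_pred))

def kl_divergence(p_true, p_pred, eps=1e-12):
    """Sum of true probabilities times log(true / predicted).

    Terms with a true probability of 0 contribute 0 by convention.
    """
    return sum(t * math.log(t / (p + eps)) for t, p in zip(p_true, p_pred) if t > 0)

# Hypothetical example: one observation, three classes, true class is the second one
p_true = [0.0, 1.0, 0.0]
p_pred = [0.2, 0.7, 0.1]
ce = cross_entropy(p_true, p_pred)
kl = kl_divergence(p_true, p_pred)
```

With one-hot true probabilities, the two classification losses coincide: both reduce to minus the logarithm of the probability predicted for the true class.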

The model parameters are initialized when the layers are created. Usually, zero initialization is not a good idea because of the need to break the symmetry between neurons. With zero initialization, the neural network conveys no information, as all the inputs give the same outputs. In the hidden layers, neurons that start with identical weights also receive identical updates, so their weights stay undifferentiated and are unlikely to reach distinct final values.

With normal initialization, the weights are taken from a random normal distribution of a given mean (usually 0) and standard deviation.

With Glorot/Xavier (Glorot and Bengio, 2010) normal initialization, the weights are taken from a random normal distribution of a given mean (usually 0) and a standard deviation that depends inversely on the square root of the sum of the number of inputs and the number of outputs.

With Glorot/Xavier uniform initialization, the weights are taken from a random uniform distribution of a given mean (usually 0) and boundaries that depend inversely on the square root of the sum of the number of inputs and the number of outputs.

He initialization (He et al., 2015) is similar to the Glorot/Xavier normal initialization, but the variance depends on the number of inputs only: it is equal to 2 divided by the number of inputs.
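The scaling rules behind these initializers can be sketched as follows. The formulas are the commonly used ones (variance 2/(n_in + n_out) for Glorot/Xavier, variance 2/n_in for He); the function names are hypothetical, and a plain normal distribution is used here as a simplification, whereas some libraries draw from a truncated normal.

```python
import math
import random

def glorot_normal_std(n_in, n_out):
    """Standard deviation for Glorot/Xavier normal initialization."""
    return math.sqrt(2.0 / (n_in + n_out))

def glorot_uniform_limit(n_in, n_out):
    """Boundary a of the uniform distribution on [-a, a] for Glorot/Xavier uniform."""
    return math.sqrt(6.0 / (n_in + n_out))

def he_normal_std(n_in):
    """Standard deviation for He normal initialization (inputs only)."""
    return math.sqrt(2.0 / n_in)

def init_weights(n_in, n_out, scheme="glorot_uniform", seed=0):
    """Return an n_out x n_in weight matrix drawn with the chosen scheme."""
    rng = random.Random(seed)
    if scheme == "glorot_uniform":
        limit = glorot_uniform_limit(n_in, n_out)
        return [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    if scheme == "glorot_normal":
        std = glorot_normal_std(n_in, n_out)
    elif scheme == "he_normal":
        std = he_normal_std(n_in)
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]

weights = init_weights(n_in=4, n_out=3, scheme="glorot_uniform")
```

A uniform distribution on [-a, a] has variance a²/3, so the boundary sqrt(6/(n_in + n_out)) gives the same variance as the Glorot/Xavier normal scheme.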

Deep neural networks have come back in vogue thanks to the rediscovery of backpropagation and the application of stochastic gradient descent (Bottou, 2011). The objective during the training of a neural network is to minimize a loss function by adjusting the weights and biases of the neural network.

In the univariate case (Figure 7), the first-order derivative indicates the direction in which the free weight parameter x has to be adjusted. If it is positive, then x has to be lower. If it is negative, then x has to be higher. If the loss function is convex, this procedure reliably finds the global minimum. If it is not convex, the procedure might only find a local minimum.

Figure 7. Model loss as a function of weight (univariate case)

Optimizing a neural network adds two major complications to the univariate case. First, there are many weights to optimize, so the derivative becomes a gradient. Some very large language models such as GPT-3 (Brown et al., 2020) have billions of parameters. Second, there are many layers, and each layer is composed with the previous ones, so the gradient has to be calculated with the chain rule.

The chain rule is a simple method to calculate the derivative of a composite function. For instance, if h(x)=f(g(x)) then h’(x)=f’(g(x))g’(x). The derivative of h is the product of two derivatives. If there are n layers, the derivative would be the product of n derivatives.
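The chain rule can be checked numerically on a small example. The functions below (a sine composed with a square) are hypothetical choices made only for illustration; the analytical derivative from the chain rule is compared with a finite-difference estimate.

```python
import math

def g(x):
    return x ** 2

def g_prime(x):
    return 2 * x

def f(u):
    return math.sin(u)

def f_prime(u):
    return math.cos(u)

def h(x):
    """Composite function h(x) = f(g(x))."""
    return f(g(x))

def h_prime(x):
    """Chain rule: h'(x) = f'(g(x)) * g'(x)."""
    return f_prime(g(x)) * g_prime(x)

def numerical_derivative(func, x, step=1e-6):
    """Central finite-difference estimate of the derivative of func at x."""
    return (func(x + step) - func(x - step)) / (2 * step)

analytical = h_prime(1.3)
numerical = numerical_derivative(h, 1.3)
```

The two values agree up to the finite-difference error, which is what backpropagation relies on when it multiplies the derivatives of successive layers.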

With one weight variable, a new value would be calculated from the current weight, the derivative at this point, and a positive learning rate parameter lr: x’ = x - lr * f’(x).

If the weights are vectors, we use the gradient instead of the derivative and the formula becomes: x’ = x - lr * ∇f(x), where ∇f(x) is the gradient of f with respect to x.

This procedure is iterative. Each application of the formula is an update. It is common to make an update after making the calculation for a group of observations (a mini-batch) taken from the training sample. The update uses the average of the gradients across the mini-batch observations: this is mini-batch stochastic gradient descent. Once all the mini-batches from the training sample have been used, we have completed an epoch. We repeat the procedure and monitor the error on the training and validation sets after each epoch.
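The update rule and the notions of mini-batch and epoch can be sketched on a toy problem. The example below fits a straight line y = w * x + b with a mean squared error loss; the data, the function name, and the hyperparameter values are assumptions made for the illustration.

```python
import random

def sgd_linear_fit(xs, ys, lr=0.1, batch_size=4, epochs=200, seed=0):
    """Fit y = w * x + b by mini-batch stochastic gradient descent on the MSE."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):                      # one epoch = one pass over the sample
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2 * error * xs[i]      # derivative of error**2 w.r.t. w
                grad_b += 2 * error              # derivative of error**2 w.r.t. b
            # Update with the average gradient over the mini-batch
            w -= lr * grad_w / len(batch)
            b -= lr * grad_b / len(batch)
    return w, b

# Hypothetical noise-free data generated from y = 2x + 1
xs = [i / 10 for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]
w, b = sgd_linear_fit(xs, ys)
```

On this noise-free data the estimates end up very close to the true slope 2 and intercept 1. With real data, the loss would also be monitored on a validation set after each epoch.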

Learning rate

The learning rate is usually not constant. It typically decreases in value as the learning progresses. In addition, several optimization methods adapt the size of each update, such as momentum, AdaGrad, RMSProp, or Adam. The idea is to speed up convergence by combining the current gradient with its past values (first moment) or past squared values (second moment). The higher the past values, the larger the adjustment to the parameters; the higher the past squared values, the smaller the adjustment. RMSProp and Adam in effect normalize the gradient so that its direction counts more than its magnitude.
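The role of the first and second moments can be illustrated with a one-parameter sketch of the momentum and Adam updates. The hyperparameter values below are commonly used defaults taken as assumptions, and the quadratic loss (x - 3)² is a hypothetical example.

```python
import math

def momentum_step(x, grad, velocity, lr=0.1, beta=0.9):
    """Momentum: accumulate past gradients in a velocity, then move along it."""
    velocity = beta * velocity + grad
    return x - lr * velocity, velocity

def adam_step(x, grad, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: scale the averaged gradient by the root of the averaged squared gradient."""
    m = beta1 * m + (1 - beta1) * grad           # first moment (past gradients)
    v = beta2 * v + (1 - beta2) * grad ** 2      # second moment (past squared gradients)
    m_hat = m / (1 - beta1 ** t)                 # bias corrections for the zero start
    v_hat = v / (1 - beta2 ** t)
    return x - lr * m_hat / (math.sqrt(v_hat) + eps), m, v

def minimize_with_adam(grad_fn, x0, steps=500):
    """Run a fixed number of Adam updates starting from x0."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        x, m, v = adam_step(x, grad_fn(x), m, v, t)
    return x

# Hypothetical loss (x - 3)**2, whose gradient is 2 * (x - 3)
x_min = minimize_with_adam(lambda x: 2 * (x - 3.0), x0=0.0)
```

In the Adam step, dividing by the square root of the second moment is what makes the direction of the gradient count more than its size.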

Because the gradient is a product of many derivatives, the final gradient can end up being very small (vanishing gradient) or very large (exploding gradient). Vanishing gradient problems can be addressed by alternative weight initialization methods and activation functions such as ReLU. Exploding gradient problems can be addressed by gradient clipping, which simply imposes a maximum and a minimum value on the gradient.
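Gradient clipping can be sketched in two common variants: clipping each component to a fixed interval, as described above, and rescaling the whole gradient when its norm exceeds a threshold. The function names and threshold values are illustrative assumptions.

```python
import math

def clip_by_value(gradient, limit):
    """Force every component of the gradient into [-limit, limit]."""
    return [max(-limit, min(limit, g)) for g in gradient]

def clip_by_norm(gradient, max_norm):
    """Rescale the gradient so that its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in gradient))
    if norm <= max_norm:
        return list(gradient)
    return [g * max_norm / norm for g in gradient]

clipped_values = clip_by_value([5.0, -7.0, 0.5], limit=1.0)
clipped_norm = clip_by_norm([3.0, 4.0], max_norm=1.0)
```

Clipping by norm keeps the direction of the gradient unchanged, whereas clipping by value can change it.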

As in all supervised learning problems, there is always a risk of overfitting the model and losing generalization. The model will perform well in-sample on the training data but poorly out-of-sample on the validation data. Figure 8 shows the loss curves as a function of the number of epochs. The training loss and the validation loss both decrease until a point where the validation loss starts to increase. From that point, the model starts to overfit the training data and generalizes worse to the validation data. Early stopping, which halts training around that point, will prevent some of the overfitting.
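Early stopping can be sketched as a simple rule on the sequence of validation losses. The patience parameter (the number of epochs without improvement that are tolerated) and the loss values below are assumptions for the illustration.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return the epoch with the best validation loss seen before stopping.

    Training stops once the validation loss has not improved for
    `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch

# Hypothetical validation losses, one per epoch (epochs are numbered from 0)
losses = [1.0, 0.8, 0.6, 0.55, 0.57, 0.60, 0.50]
best = early_stopping_epoch(losses, patience=2)
```

Here training stops at epoch 5 and the weights from epoch 3 would be kept. The later improvement at epoch 6 is never seen, which is the trade-off of a small patience value.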

Figure 8. Model training and validation losses as a function of the number of epochs

Another method to limit overfitting is to use L1 and L2 regularizations. They consist of limiting the size of the weights by adding a regularization term to the loss. Instead of minimizing f(x), the training minimizes f(x) + alpha * ||x||1 or f(x) + alpha * ||x||2, where ||.||1 is the L1 norm (sum of the absolute values of the vector components) and ||.||2 is the L2 norm (square root of the sum of the squared component values). By limiting the size of the weights, there is less risk of overfitting to training data because the weights cannot take extreme values.
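The regularized loss can be written directly from the two formulas above. This is a minimal sketch with hypothetical names and values; note that many libraries implement the L2 penalty with the squared L2 norm rather than the norm itself.

```python
import math

def l1_norm(weights):
    """Sum of the absolute values of the components."""
    return sum(abs(w) for w in weights)

def l2_norm(weights):
    """Square root of the sum of the squared components."""
    return math.sqrt(sum(w * w for w in weights))

def regularized_loss(data_loss, weights, alpha, kind="l2"):
    """Add alpha times the chosen norm of the weights to the data loss."""
    if kind == "l1":
        penalty = l1_norm(weights)
    elif kind == "l2":
        penalty = l2_norm(weights)
    else:
        raise ValueError(f"unknown regularization: {kind}")
    return data_loss + alpha * penalty

# Hypothetical data loss of 1.0 and weight vector (3, -4)
loss_l1 = regularized_loss(1.0, [3.0, -4.0], alpha=0.1, kind="l1")
loss_l2 = regularized_loss(1.0, [3.0, -4.0], alpha=0.1, kind="l2")
```

The larger the weights, the larger the penalty, so the optimizer is pushed toward smaller weights unless they reduce the data loss enough to compensate.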

Dropout (Srivastava et al., 2014) is a powerful regularization technique. During training, dropout randomly sets a fixed fraction of a layer's inputs to 0, which amounts to ignoring the corresponding weights for that update. The remaining inputs are scaled up to preserve their expected sum. With dropout, the model does not rely on particular weights, is more robust to overfitting, and generalizes better.
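Dropout can be sketched as follows, assuming the common "inverted dropout" variant in which the values that are kept are divided by the keep probability during training, so that nothing needs to change at inference time. The function name and the seed argument are hypothetical.

```python
import random

def dropout(activations, rate, training=True, seed=None):
    """Randomly zero a fraction `rate` of the values and rescale the others.

    At inference time (training=False) the values are returned unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    rng = random.Random(seed)
    keep_probability = 1.0 - rate
    return [
        a / keep_probability if rng.random() < keep_probability else 0.0
        for a in activations
    ]

# Hypothetical layer of 10,000 activations all equal to 1, with a 50% dropout rate
dropped = dropout([1.0] * 10000, rate=0.5, seed=1)
average = sum(dropped) / len(dropped)
```

About half of the values are set to 0 and the others are doubled, so the average stays close to 1.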

Batch normalization (Ioffe and Szegedy, 2015) is a technique to stabilize the training of a deep neural network. Within each mini-batch, the inputs to a layer are normalized to a mean of 0 and a standard deviation of 1 before entering an activation function. This makes the learning easier, as the weight updates have a similar scale and do not become too large or too small.
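The normalization step can be sketched for a single feature observed across a mini-batch. The scale gamma and shift beta are the learnable parameters introduced by Ioffe and Szegedy; their default values here and the small constant eps are assumptions, and the running statistics used at inference time are left out.

```python
import math

def batch_normalize(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then apply scale and shift."""
    mean = sum(batch) / len(batch)
    variance = sum((v - mean) ** 2 for v in batch) / len(batch)
    return [gamma * (v - mean) / math.sqrt(variance + eps) + beta for v in batch]

# Hypothetical mini-batch of five values for one feature
normalized = batch_normalize([1.0, 2.0, 3.0, 4.0, 5.0])
new_mean = sum(normalized) / len(normalized)
new_variance = sum((v - new_mean) ** 2 for v in normalized) / len(normalized)
```

After normalization, the mini-batch has a mean of 0 and a variance very close to 1 (slightly below 1 because of eps).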

When the model is trained, additional metrics can be useful in addition to the model loss. Measures of model fit such as cross-entropy for probabilistic models or cosine similarity for regression models can be reported as metrics. For instance, in a classification model, accuracy is a useful statistic, as well as AUC (area under the curve), true positives and negatives, false positives and negatives, precision and recall, and sensitivity and specificity.
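Several of the classification metrics listed above derive from the same four counts. The sketch below assumes binary labels coded as 0 and 1; the function names and the example labels are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, true negatives, false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn

def safe_ratio(numerator, denominator):
    """Return 0.0 instead of failing when a class is absent."""
    return numerator / denominator if denominator else 0.0

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall (sensitivity), and specificity."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": safe_ratio(tp + tn, tp + fp + tn + fn),
        "precision": safe_ratio(tp, tp + fp),
        "recall": safe_ratio(tp, tp + fn),       # also called sensitivity
        "specificity": safe_ratio(tn, tn + fp),
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
```

In this example there are three true positives, one false positive, three true negatives, and one false negative, so all four metrics are equal to 0.75.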

While the model is training, it is also run on validation data. The same metrics and loss statistics are calculated for both training and validation data. Before being deployed in production, the model can be run on test data.

The model is then used for inference and prediction on new data online or in batch mode.

During the training, validation, and inference phases, model and performance data and statistics should be collected. In TensorFlow, TensorBoard (Figure 9) can be used to visually present and monitor such data. The model weights, summary plots, and training graphs can easily be reported on such a dashboard.

Figure 9. TensorBoard

Bottou, L., 2011. Large-Scale Machine Learning with Stochastic Gradient Descent, in: Statistical Learning and Data Science. Chapman and Hall/CRC, pp. 33–42. https://doi.org/10.1201/b11429-6

Brown, T.B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D.M., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., Amodei, D., 2020. Language Models are Few-Shot Learners. arXiv:2005.14165 [cs].

Glorot, X., Bengio, Y., 2010. Understanding the difficulty of training deep feedforward neural networks, in: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (AISTATS). PMLR 9, pp. 249–256.

Goodfellow, I., Bengio, Y., Courville, A., 2016. Deep Learning. The MIT Press, Cambridge, Massachusetts.

He, K., Zhang, X., Ren, S., Sun, J., 2015. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. arXiv:1502.01852 [cs].

Ioffe, S., Szegedy, C., 2015. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv:1502.03167 [cs].

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Salakhutdinov, R., 2014. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 15, 1929–1958.

“AI will be enormous. It will be enormous for idea generation. It will take care of errors. … I could go on and on and on about the complexities it raises, the opportunities it raises.”

Jamie Dimon, CEO JP Morgan Chase

JP Morgan (JPM) is the largest US bank in terms of assets and market capitalization. JP Morgan spends 11bn dollars annually on technology, 40% of which goes to new initiatives, and AI is becoming a larger share of this budget (for comparison, JPM net income was 36bn dollars in 2019).

Thanks to its size and scale it is able to gather a large amount of data (400 petabytes of data) on its customers, operations, transactions, and markets. Its challenge is to serve its customers better with personalized services at scale with efficiency, reliability, security, and confidentiality.

It has brought in expertise from technology companies such as Google and from academia (Carnegie Mellon) to build its in-house AI capabilities. It focuses on both academic research and applied research and initiatives.

JPM lists six areas where it plans to use AI:

Anomaly detection and in particular fraud detection is a very active area for AI deployment in financial institutions. It can be used for anti-money laundering, credit card fraud prevention and detection, trade manipulation detection, and cyber-security.

Pricing is already using standard analytics (matrix pricing, derivatives pricing) in banks but can be refined with richer machine learning models (deep learning models, reinforcement learning models).

News, customer intelligence, smart documents, and virtual assistants involve a lot of Natural Language Processing (NLP). Automatic text recognition, classification, understanding, and generation are known NLP techniques that can be deployed in this context. The oldest application is probably check deposit at ATMs, which is now also done with a mobile phone.

JPM is using virtual assistants to guide its corporate clients (CFOs, treasurers) in its treasury services division portal and to help them access information, receive recommendations, and transact. It is also using AI to facilitate trading and share relevant research with its clients.

JPM also has a research center led by a CMU professor, Manuela Veloso, PhD, which focuses on:

These areas of research are probably a bit more academic though ethics, fairness and explainability, privacy, and cryptography are very important for companies using AI.

JPM is also working closely with some AI startups, either mentoring them or investing in them.

The challenge is the sheer scale of JPM operations and the infrastructure it requires to sustain its AI efforts and operations. It has recently deployed Omni AI to provide data for its AI researchers and engineers. As it relies more on the public cloud, security and confidentiality are also very important. A lot of activities are also probably not easy to replace with AI such as investment banking advisory though AI could help provide insights from new sources of data and make bankers more efficient and less focused on just collecting data.

Ping An is the largest insurance company in China. It is a publicly-listed conglomerate providing services in Life and Health Insurance, Property and Casualty, Banking, Asset Management, Fintech, and Healthtech to over 200 million customers and over 500 million online customers. Its subsidiary Ping An Bank has recently been named World’s Best Digital Bank at the Euromoney Global Awards for Excellence 2020.

In banking, Ping An with its subsidiary Ping An Bank is a relative latecomer compared to the incumbents. There are four historical banks: Industrial & Commercial Bank of China (ICBC), China Construction Bank Corp. (CCB), Agricultural Bank of China (ABC), and Bank of China, and then newcomers such as China Merchants Bank (CMB) and China CITIC Bank.

To differentiate itself and because of the scale of its operations, it has invested heavily in technology, and in AI in particular (along with blockchain and cloud computing), to service its customers. Ping An follows a finance + technology strategy, investing 1% of its revenues in R&D every year to enhance its technology, improve efficiency, lower its costs, and better manage risk. Technology feeds into five ecosystems: financial services, health care, auto services, real estate services, and smart city services.

In financial services, Ping An bank is using AI extensively in its “AI Banker” system. The “AI Banker” is used to:

Technologies such as face recognition and document recognition are also used for customer identification to provide credit or make payments. Product recommendation systems are also used to match customers with

Ping An offers some of these capabilities to other banks and insurance companies through its OneConnect SaaS (Software as a Service) platform.

In healthcare, Ping An has developed Ping An Good Doctor, a platform to connect doctors and their patients. Ping An Good Doctor has more than 300 million registered customers and 67 million monthly users. It provides information on 3,000 diseases and suggests treatments based on medical records and data. The doctor also has access to the electronic profile of the patient. The system is designed to prevent misdiagnosis and missed diagnosis.

Ping An has recently deployed one-minute clinics that allow patients to interact with an AI doctor for diagnosis and receive treatment. The AI doctor interacts with the patient in the clinic booth, finds a diagnosis, then a real doctor confirms the diagnosis and provides supplementary information. The common drugs are stored cryogenically in the booth and can be delivered on-site. Drugs can also be ordered through the Good Doctor App.

Ping An's insurance arm uses a Credit-Based Smart Auto Insurance Claim Solution to process auto claims. Several AI technologies are used in this process. After an automobile accident, a customer can file a claim on a mobile phone, take pictures of the damage, and submit any relevant documents. The customer is identified by face recognition. The AI system can assess the losses by identifying the auto parts and accessing a database of replacement costs. The customer then receives compensation based on the loss assessment but also on her driving behavior and history. The whole process can take just a few minutes.

Ping An is anticipating the emergence of self-driving cars, where the risk shifts from the drivers to the automakers, and is already thinking about how to cover this new risk. With AI and more data, it is moving to a predictive model of damage loss estimation instead of a simple ex-post model of loss estimation.

In other areas, Ping An is leveraging satellite imaging, drones, and Internet of Things (IoT) to assess business risks such as climate change. These data can be fed into AI models which can predict risk and losses more accurately.

The FinTech and HealthTech initiatives are still a small part of Ping An’s current profits. They require very large investments that might test the patience of investors. These are also very competitive areas where AI innovations are key but present risks if they do not have a long track record. Ping An is also offering many of its AI models on its platforms such as OneConnect. Ping An will need to implement a smart AI risk management system to address these new risks internally and externally.

“AI is being used in almost every corner of Ant’s business,”

Yuan (Alan) Qi, a vice president and chief data scientist at Ant

Ant Group is the fintech affiliate of Alibaba. It was founded as Ant Financial in 2011 to operate Alipay, the digital payment system of Alibaba set up in 2004 to establish escrow payments for customer transactions. Alipay has expanded to be much more than a payment platform and is now used for commercial transactions, financial transactions, daily life transactions, and to access over two million third-party apps (see Figure 1).

Alipay has over 1 billion annual active users, over 700 million monthly active users, and more than 80 million active merchants. Ant Group not only works with Alibaba, which remains its main customer, but also with many other partners such as banks, asset managers, and insurers. Ant Group works with more than 2,000 partner financial institutions to give them access to customers and help them offer financial services.

Figure 1. Alipay on mobile phone

Ant Group has its own products: asset management (Yu’e Bao for money market funds), consumer credit (Huabei), health care (Xiang Hu Bao), private banking (MY Bank), and credit scoring (Zhima Credit). Some of its products can be combined with its partner products to enhance customer insights and risk management.

Figure 2. Ant Group offers Credit, Investment, and Insurance services

Ant Group’s strategy is to increase the trust and engagement of its customers in the Alipay platform by offering all kinds of services (digital finance, food, entertainment, transportation, travel, healthcare, public utilities, etc.) and to gain very accurate insights about them. These insights allow Ant Group to offer more innovative and customized products and services, either directly or indirectly through its partners.

Ant Group serves over one billion customers, 80 million merchants, and processes over 15 trillion dollars of transactions (Total Payment Value) every year. It has to be very accurate to maintain trust and customer satisfaction and keep on offering appropriate tailored products while managing all the risks related to KYC, fraud, AML, credit, liquidity, operations, security, and data privacy. In particular, its expertise in fraud detection is critical for the success of its platform. AI is used extensively at Ant Group to support not only its scale and scope of business activities but also its numerous partner operations.

Ant Group specializes in technology applied to the world of consumer and small and micro-business finance and is an online leader in CreditTech, InvestmentTech, and InsureTech. AI techniques such as machine learning, natural language processing, man-machine interaction, secure collaborative intelligence, and time-series graph intelligence support all these activities.

Ant Group has developed AlphaRisk, an AI-based smart risk control engine to detect and prevent fraud. It offers real-time risk-based decisions to counter fraud attempts, real-time transaction verification, and customer authentication that can be used by third parties. AlphaRisk is powered by state-of-the-art AI algorithms. Its prediction models allow companies to manage their risks better, secure their platforms, and protect legitimate customer transactions against fraud. Its models are self-learning and refit automatically.

Credit is a growth area for Ant Group. The level of consumer credit in China is still very low compared to the US and other developed countries. Working with 100 partner banks, Ant Group offers consumer and small and micro business loans. Models are used to assess and reevaluate credit limits, the likelihood of a borrower’s ability and willingness to repay a loan, and the pricing on the loan.

Ant Group is developing joint credit risk models with some partner banks. As in federated learning, the models use data from both Ant Group (consumption, wealth, risk profile) and the bank (tax and income) without the data ever leaving each institution, which maintains the privacy of the data.

AI is used to match customers to investment products according to their risk profiles and behavior. Ant Group lets asset managers leverage its customer database, technology, and AI models to offer more innovative investment products on its platform.

Intelligent investment advisory is also used for asset allocation and investment recommendation. In partnership with Vanguard, it offers AI-based fund investment advisory services on its wealth management platform. It suggests a fund allocation based on the customer’s financial objectives, risk tolerance, and time horizon. The minimum investment is only 113 dollars.

The insurance market is relatively underdeveloped in China. With a wealthier and aging population, there are growth opportunities in life, health, and P&C insurance products. Ant Group offers shipping return insurance for merchandise purchased on the Taobao platform, health insurance, and pension annuity insurance, and also works with third-party insurers to sell their products and collect insurance premiums and contributions.

AI models can be deployed to assess the risk and pricing of insurance products based on the high-quality data collected on each customer. AI is also used to assist insurance claims, in particular, Image Recognition and Natural Language Processing to analyze submitted documentation and photos.

Due to the scale and scope of Ant Group’s operations, there are multiple challenges. We will focus on the ones related to AI.

First, Ant Group depends on trust in the accuracy of its AI models. It relies on these models for prediction, decision making, risk management, matching of customers to products, pricing and valuation, fraud detection and prevention, etc. Any failure of one of its models can be very costly. All the stakeholders in Ant Group’s AI models need to trust them. Markets, products, customers, and small businesses evolve all the time and become more sophisticated, requiring the AI models to be continuously improved and updated.

Second, Ant Group works with a lot of user data. These data are at risk of being misused intentionally or unintentionally, and this can hurt users’ trust in Ant Group’s operations. Many countries, including China, have new privacy laws that are becoming more stringent. Bad actors can also attempt to steal or misuse data. They can disguise themselves as partners or users.

Third, Ant Group relies on a network of partners and affiliates that it does not control directly. Any model failures or data issues on their side can negatively impact Ant Group’s AI operations. For instance, if incorrect customer income data is used by a financial partner, the final credit decision could be erroneous.

Fourth, Ant Group operates in financial services that are heavily regulated. Commercial and retail banking, asset management, insurance are all regulated. Online financial services are also starting to be closely regulated. Failure to comply can be very costly to Ant Group and compliance can be expensive.

Fifth, Ant Group is a technology company that needs to constantly innovate while maintaining operations at a huge scale domestically and internationally. With so many users, customers, merchants, small businesses, financial partners, products, and daily transactions, its operations can be extremely complex to manage and change.

AWS is the cloud services subsidiary of Amazon. It provides many tools and services to develop AI and Machine Learning models on its platform, from data ingestion, data exploration, data transformation, to model training, tuning, optimization, and deployment.

Amazon Athena is a serverless, fast, efficient, highly available, durable, and secure database query engine for big data. It is based on Presto, an open-source query engine originally created by Facebook to query its own databases with low latency. It queries data stored in Amazon S3 in different formats such as CSV, JSON, ORC, Avro, or Parquet using standard SQL. It can also execute join queries against JDBC-compliant databases such as MySQL, and other databases such as Amazon Redshift.

Amazon Redshift is a cloud-based data warehouse and a relational database management system. It replaces on-site data warehouses and database systems. It is based on the open-source PostgreSQL project but works very differently as it is focused on very large databases.

It works with clusters of nodes and slices of nodes to process the SQL queries and retrieve the structured data stored in the nodes. A cluster can have a leader node that distributes tasks to the worker nodes and makes them work in parallel. Amazon Redshift is highly scalable, with nodes added when required, and can run very fast queries on petabytes of data. It can be linked to ETL processes and feed analytical workloads (dashboard, visualization, and business intelligence tools) at the enterprise level.

Amazon Kinesis is a data streaming platform to ingest, process, analyze, and store real-time, high-throughput streaming data. It is a managed AWS service that plays a role similar to the open-source project Apache Kafka, initially developed by LinkedIn for its own needs. Streaming data can be video data, transaction data, time-series data, or any data that are produced continuously. Contrary to batch analytics, streaming analytics allows an almost immediate reaction to new events and constantly refreshed outputs for end-users and customers. It is, for instance, ideal for price data, fraud detection, and system monitoring data.

Amazon Kinesis offers four capabilities: Kinesis Video Streams for video data captured by cameras, Kinesis Data Streams to capture, process, and store streaming data from multiple sources, Kinesis Data Firehose for continuous ETL jobs and data transfer to AWS data stores, and Kinesis Data Analytics for transforming and analyzing streaming data.

Amazon SageMaker Notebooks are Jupyter-style notebooks that can import, process, and analyze the data from AWS data stores. Usually, only small data samples can be analyzed in a SageMaker Python notebook. If necessary, Spark jobs can be run from SparkMagic notebooks on an EMR Spark cluster to process the data, or Redshift and Athena can be used directly to explore the data.

Since Amazon Athena is a database query engine, it can be used for data exploration like in a normal relational database.

Amazon QuickSight is a business intelligence tool to create interactive dashboards that can be embedded into websites, analytics reports, and emails to share ML insights with the entire organization. It connects seamlessly with all AWS storage and database solutions. It is serverless and therefore scalable: as the number of users grows, it can grow along with them. It allows quick iteration when developing new ML models as results can quickly be shared with all stakeholders.

AWS Glue is a serverless extract, transform, and load (ETL) tool to prepare data and identify useful metadata and data transformations from an AWS data lake or data source (Amazon S3, Redshift, and others). The metadata and table definitions are stored in the AWS Glue Data Catalog. It can load the final data into a data store such as Amazon Redshift. It is built on Apache Spark and automatically generates ETL code in Scala or Python that the user can modify.

Amazon SageMaker provides Notebooks that a user can use to write Python scripts and access the standard data science and machine learning libraries (Pandas, Matplotlib, Seaborn, Scikit-Learn, TensorFlow, and others). Athena and Redshift can also be accessed through these notebooks thanks to the Athena client library (PyAthena) and SQL libraries (SQLAlchemy). Complex queries can be sent directly from the notebooks.

Amazon SageMaker Processing is used when the whole production data needs to be processed and transformed into useful features at scale. The type and the number of instances need to be defined to perform the processing step.

Amazon EMR is a scalable data processing engine built on Hadoop or Apache Spark. Apache Spark is a very popular distributed processing and analytics engine for big data. Workloads are automatically deployed to clusters and nodes. A SageMaker Notebook can run Spark commands and process data on a Spark cluster. The data can be analyzed and tested with the Deequ API from Amazon. Data can be tested for missing or null values, range, correct formatting, completeness, uniqueness, consistency, size, correlation, and so on.
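The kinds of checks listed above can be illustrated with a minimal plain-Python sketch. It does not use the Deequ API or Spark; the function names, column names, and sample rows are hypothetical and only show what completeness, uniqueness, and range tests compute.

```python
from typing import Any, Dict, List, Optional

Row = Dict[str, Optional[Any]]


def completeness(rows: List[Row], column: str) -> float:
    """Fraction of rows whose value in `column` is not missing (None)."""
    if not rows:
        return 0.0
    present = sum(1 for row in rows if row.get(column) is not None)
    return present / len(rows)


def is_unique(rows: List[Row], column: str) -> bool:
    """True when no non-missing value in `column` appears more than once."""
    values = [row[column] for row in rows if row.get(column) is not None]
    return len(values) == len(set(values))


def in_range(rows: List[Row], column: str, low: float, high: float) -> bool:
    """True when every non-missing value in `column` lies in [low, high]."""
    values = [row[column] for row in rows if row.get(column) is not None]
    return all(low <= value <= high for value in values)


# Hypothetical sample: one missing age and one duplicated customer_id.
sample = [
    {"customer_id": 1, "age": 34},
    {"customer_id": 2, "age": None},
    {"customer_id": 3, "age": 51},
    {"customer_id": 3, "age": 29},
]

report = {
    "age_completeness": completeness(sample, "age"),
    "customer_id_unique": is_unique(sample, "customer_id"),
    "age_in_range": in_range(sample, "age", 18, 100),
}
```

On a real cluster the same constraints would be declared once and evaluated in a distributed way over the full dataset rather than over an in-memory list.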

Amazon SageMaker Notebooks can use standard machine learning libraries such as Scikit-Learn, TensorFlow, MXNet, or PyTorch to transform the data, do feature engineering, split the data, and train the models on samples. The libraries are accessed by loading containers with pre-defined environments, through scripts, or customized containers.

Some objective metrics such as accuracy have to be defined to evaluate the model performance. Model hyperparameters and parameters can be saved to be examined for model review and evaluation.

Amazon SageMaker Training Jobs Debugger uses rules to check for issues such as overfitting, data imbalance, or vanishing gradients. If the rules are triggered, the training stops to allow debugging of the model and inspection of intermediary steps and objects.
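As an illustration of what such a rule might check, the sketch below flags overfitting when the validation loss rises for several consecutive epochs while the training loss keeps falling. This is an assumed, simplified rule written in plain Python, not the SageMaker Debugger API; the function name, the `patience` parameter, and the loss values are hypothetical.

```python
from typing import List, Optional


def overfitting_epoch(
    train_loss: List[float], val_loss: List[float], patience: int = 2
) -> Optional[int]:
    """Return the first epoch index at which validation loss has risen for
    `patience` consecutive epochs while training loss kept falling.
    Return None if the rule never fires."""
    streak = 0
    for epoch in range(1, min(len(train_loss), len(val_loss))):
        train_improving = train_loss[epoch] < train_loss[epoch - 1]
        val_worsening = val_loss[epoch] > val_loss[epoch - 1]
        streak = streak + 1 if (train_improving and val_worsening) else 0
        if streak >= patience:
            return epoch
    return None


# Hypothetical loss curves: validation loss turns upward after epoch 2.
train = [1.00, 0.80, 0.60, 0.45, 0.30, 0.20]
val = [1.10, 0.90, 0.85, 0.88, 0.95, 1.05]
stop_at = overfitting_epoch(train, val, patience=2)
```

A training loop could call such a rule after every epoch and stop as soon as it returns an epoch index, leaving the saved intermediate state available for inspection.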

Amazon SageMaker Hyper-Parameter Optimizer can find the best hyperparameters within some ranges to optimize some objective metrics using different methods such as grid search, random search, or Bayesian optimization.
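The difference between grid search and random search can be sketched in a few lines of plain Python. The objective below is a toy stand-in for a validation metric, and the hyperparameter names and ranges are assumptions made for illustration; it is not the SageMaker tuning API, and Bayesian optimization is left out.

```python
import itertools
import random


def objective(learning_rate: float, depth: int) -> float:
    """Toy validation score (higher is better), peaking at lr=0.1, depth=6."""
    return -((learning_rate - 0.1) ** 2) * 100 - ((depth - 6) ** 2) * 0.01


def grid_search(lr_values, depth_values):
    """Evaluate every combination of the listed values and keep the best."""
    candidates = itertools.product(lr_values, depth_values)
    return max(candidates, key=lambda c: objective(*c))


def random_search(lr_range, depth_range, n_trials, seed=0):
    """Sample n_trials points uniformly inside the ranges and keep the best."""
    rng = random.Random(seed)
    trials = [
        (rng.uniform(*lr_range), rng.randint(*depth_range))
        for _ in range(n_trials)
    ]
    return max(trials, key=lambda c: objective(*c))


best_grid = grid_search([0.01, 0.1, 0.3], [2, 6, 10])
best_random = random_search((0.01, 0.3), (2, 10), n_trials=20)
```

Grid search only ever tries the listed values, while random search can land anywhere inside the ranges, which is often more efficient when only a few hyperparameters matter.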

Amazon SageMaker AutoPilot is the AutoML tool of SageMaker. It analyzes the raw data and the target to be predicted. It chooses the best algorithm candidates, processes the data to create the best features, and automatically trains and tunes the models. The best hyperparameters are automatically selected for each algorithm.

Amazon SageMaker Experiments tracks the multiple model runs and provides auditability, traceability, and reproducibility of these runs. Data, parameters, hyperparameters, and models can be accessed historically to review and reproduce feature engineering, training, tuning, and deployment results. Each experiment includes trials, each trial includes steps, and each step includes tracking information. Versioning and lineage are kept across all the trials.
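The experiment, trial, and step hierarchy can be pictured as nested records. The sketch below uses plain Python dataclasses with hypothetical class and field names; it is not the SageMaker Experiments SDK, only an illustration of how tracked information makes runs comparable.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Step:
    """One stage of a trial (e.g. training) with its tracked information."""
    name: str
    parameters: Dict[str, float] = field(default_factory=dict)
    metrics: Dict[str, float] = field(default_factory=dict)


@dataclass
class Trial:
    name: str
    steps: List[Step] = field(default_factory=list)


@dataclass
class Experiment:
    name: str
    trials: List[Trial] = field(default_factory=list)

    def best_trial(self, step_name: str, metric: str) -> Trial:
        """Trial whose named step reports the highest value of `metric`."""
        def score(trial: Trial) -> float:
            values = [
                s.metrics[metric]
                for s in trial.steps
                if s.name == step_name and metric in s.metrics
            ]
            return max(values, default=float("-inf"))
        return max(self.trials, key=score)


# Hypothetical runs of the same experiment with different learning rates.
experiment = Experiment(
    name="churn-model",
    trials=[
        Trial("trial-1", [Step("training", {"learning_rate": 0.10}, {"accuracy": 0.81})]),
        Trial("trial-2", [Step("training", {"learning_rate": 0.05}, {"accuracy": 0.86})]),
    ],
)
winner = experiment.best_trial("training", "accuracy")
```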

Amazon SageMaker Model Endpoints allow the user to interface with a model to get inference results on production data. It requires the location of the data and model artifacts (e.g. an S3 bucket), the container of the model, and some parameters and compute resource configurations to get inferences from the model. Different variants of the model can be requested to run in parallel. Endpoints are accessed through REST APIs.

Amazon SageMaker Model Monitoring monitors the model and identifies any deviations from a baseline. A baseline is created from the training data using a tool such as Deequ in Apache Spark. Model Monitoring captures the data and model inference results and checks that all the constraints are satisfied. If they are not, Amazon CloudWatch is triggered and sends warnings about the deviation. AWS CloudTrail saves all the model logs to support model reviews and debugging.
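The idea of comparing captured production data against a baseline can be sketched as follows. This is a deliberately simple range constraint in plain Python, with hypothetical function names, tolerance, and income values; the actual constraints computed by Model Monitoring are richer and are not reproduced here.

```python
from typing import Dict, List, Tuple


def build_baseline(training_values: List[float]) -> Dict[str, float]:
    """Record the range of a feature observed in the training data."""
    return {"min": min(training_values), "max": max(training_values)}


def check_batch(
    baseline: Dict[str, float], live_values: List[float], tolerance: float = 0.05
) -> Tuple[float, bool]:
    """Return the share of live values outside the baseline range and
    whether that share exceeds the tolerance (i.e. an alarm should fire)."""
    outside = [
        v for v in live_values
        if not baseline["min"] <= v <= baseline["max"]
    ]
    share = len(outside) / len(live_values)
    return share, share > tolerance


# Hypothetical feature: customer income in thousands.
baseline = build_baseline([20, 35, 50, 42, 28])
normal_share, normal_alarm = check_batch(baseline, [25, 30, 45, 48])
drift_share, drift_alarm = check_batch(baseline, [25, 70, 90, 30])
```

In the second batch, half of the values fall outside the range seen during training, so the alarm flag is raised; in a deployed system this is the point where a warning would be sent.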

A/B Tests are used to improve production models and test models and hypotheses on production data. Amazon SageMaker A/B Tests can be performed using Endpoints. Different training data, model versions, and compute resource configurations can be tested with Amazon SageMaker Model Endpoints. After reviewing the different model results, an improved model can be selected to replace the current one.

With Amazon SageMaker Canary Rollouts, a new model with a different production variant than the current model can be deployed through Endpoints to a limited number of customers and progressively be expanded to more customers if the model performance is satisfactory.
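A canary rollout boils down to sending a small, stable share of customers to the new variant and enlarging that share over time. The sketch below shows one assumed way to do this with a deterministic hash of the customer identifier; the function name and identifiers are hypothetical, and SageMaker itself splits traffic through the configuration of its production variants rather than through this code.

```python
import hashlib


def assign_variant(customer_id: str, canary_share: float) -> str:
    """Map a customer to 'canary' or 'current' in a stable way.

    The hash places each customer at a fixed point in [0, 1); customers
    below `canary_share` get the new model. Raising the share only adds
    customers to the canary group, it never moves anyone back.
    """
    digest = hashlib.sha256(customer_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform in [0, 1)
    return "canary" if bucket < canary_share else "current"


customers = [f"customer-{i}" for i in range(10_000)]

# Start with roughly 10% of customers on the new model.
canary_at_10 = [c for c in customers if assign_variant(c, 0.10) == "canary"]
share_at_10 = len(canary_at_10) / len(customers)

# Expanding to 50% keeps every early canary customer on the new model.
still_canary = all(assign_variant(c, 0.50) == "canary" for c in canary_at_10)
```

The same routing function also covers a simple A/B test: a fixed share of 0.5 splits customers evenly between two variants.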

Amazon SageMaker Batch inference is an alternative to Endpoints if real-time results are not necessary. Amazon SageMaker reads the batch data from an S3 bucket location, runs inference from a model, and delivers the results to another S3 bucket location.

Figure 1. AWS Step Functions. Source: Amazon

AWS Step Functions is an orchestration tool to coordinate the tasks of a machine learning workflow such as processing the data and running AWS Lambda functions or pre-trained models. It can be used for extract, transform, and load (ETL) processes, for breaking down a complex machine learning codebase to make it more modular, for coordinating batch processing jobs, and for triggering events and notifications. AWS Step Functions presents each workflow as a visual graph.
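A workflow of this kind is declared as a state machine in the JSON-based Amazon States Language. The sketch below builds such a definition as a Python dictionary and walks through it locally. The state names and the resource ARNs (with their `REGION` and `ACCOUNT_ID` placeholders) are hypothetical, and the helper function only checks the ordering of states; it does not call AWS.

```python
import json

# Hypothetical three-step ML workflow: process data, train, then notify.
workflow = {
    "Comment": "Illustrative ML workflow",
    "StartAt": "ProcessData",
    "States": {
        "ProcessData": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:process-data",
            "Next": "TrainModel",
        },
        "TrainModel": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:train-model",
            "Next": "Notify",
        },
        "Notify": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:notify",
            "End": True,
        },
    },
}


def execution_order(definition: dict) -> list:
    """Follow the Next links from StartAt until a state marked End.

    Raises KeyError on a reference to an undefined state and ValueError
    if the links loop back on themselves.
    """
    order = []
    name = definition["StartAt"]
    while True:
        if name in order:
            raise ValueError(f"cycle detected at state {name!r}")
        state = definition["States"][name]
        order.append(name)
        if state.get("End"):
            return order
        name = state["Next"]


definition_json = json.dumps(workflow, indent=2)  # text form of the definition
steps = execution_order(workflow)
```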

Figure 2. Amazon EventBridge. Source: Amazon

Amazon EventBridge connects events (changes of state) to workflows. The events can come from SaaS applications (Datadog, OneLogin, PagerDuty, Saviynt, Segment, SignalFX, SugarCRM, Symantec, Whispir, and Zendesk), customized applications, or AWS Services. They trigger workflows that can include connecting to applications, microservices, or databases, running AWS Lambda functions and other AWS applications, or communicating results.

Tencent Holdings (“Tencent”) is a technology conglomerate based in China. It offers products and services in consumer internet, online gaming, social networks, media and entertainment, fintech, and cloud. Its most well-known products are QQ, an instant messaging app for teenagers, and WeChat (Weixin in mainland China), a mobile messaging app that also offers other services such as digital payment, peer-to-peer payment, shopping, and games.

WeChat Pay is the digital payment service of WeChat. WeChat also includes mini-programs, which are apps within WeChat developed for third-party businesses. WeChat Pay competes directly with AliPay of Ant Financial. WeChat Pay can be used in-store at the point of sale with a WeChat Pay barcode or merchant QR code, on websites, on mobile apps, on WeChat official merchant accounts, or in mini-programs hosted in WeChat. A WeChat Pay account is most commonly linked to payment cards and today can be linked to an international credit card such as Visa, Mastercard, or American Express.

Like AliPay, WeChat Pay offers wealth management services such as savings and investment products through its platform LiCaitong. It partners with banks, mutual funds, and wealth management companies or bank subsidiaries, including BlackRock.

Figure 2. WeChat Pay on mobile phone

WeChat has more than 1.1 billion users and WeChat Pay has more than 900 million users. Tencent’s business is all digital and consumer-oriented. Given its size, it needs to leverage AI to support its products and services at scale. IT and cloud infrastructure management, customer support and enhanced customer engagement, payment fraud prevention and detection, digital content management and monitoring, product innovation, all require advanced AI to grow.

Tencent has three labs dedicated to AI: Tencent AI Lab, Youtu Lab, and WeChat AI. Tencent AI Lab is focused on fundamental research. Youtu Lab is developing applications in image processing, pattern recognition, and deep learning. WeChat AI is focused on Speech Recognition, Natural Language Processing, Computer Vision, Data Mining, and Machine Learning for WeChat.

Tencent also invests in many AI accelerators and AI startups. It has invested in over 800 companies, of which 70 have gone public. It has an office in Palo Alto, CA to invest in non-Chinese startups. It has invested in Tesla, Spotify, and Snap.

Tencent is also involved in agriculture, healthcare, industry, and manufacturing applications of AI.

Social AI aims at developing better interactions between humans and machines. For instance, the lab has developed a smart chat application using natural language processing and understanding. The chat can be customized and used by businesses on the WeChat App or other platforms to interact with their customers.

Game AI facilitates the interaction between the real world and the virtual world of games and continuously enhances the players’ game experience. It supports the numerous online games offered by Tencent and its partners (Riot Games’ League of Legends, Epic Games’ Fortnite, BlueHole’s PlayerUnknown’s Battlegrounds). It has recently developed an AI player named Wukong AI, which learned how to play games such as Honor of Kings through reinforcement learning, the same way AlphaGo of DeepMind learned to play Go (Tencent has its own AI Go player named Fine Art). Humans can play against Wukong AI, and average players have difficulty beating it at higher levels.

Content AI focuses on search, personalized recommendation, and content generation for its users. It improves the content and recommendations of online video subscription services (drama series, anime series, variety shows, and short videos in the Weishi app), music (paid streaming music), reading subscription platforms (Weixin Reading app), and news (WeChat Moments newsfeed).

Platform AI provides tools to develop AI applications using OCR, machine translation, conversational bots, speech recognition, natural language processing, sentiment analysis, computer vision, human body and face recognition, and image and video processing and enhancement.

Intelligent Titanium Machine Learning is a one-stop cloud-based machine learning platform for machine learning engineers and data scientists to perform model training, evaluation, and prediction. Tencent Yunzhi Tianshu Artificial Intelligence Service Platform is an AI platform service to deploy AI applications in enterprises. It connects edge devices, AI algorithms, and data through data connectors.

Youtu Lab specializes in computer vision and offers different applications in policing, person search and identification, vehicle traffic control and monitoring, face verification, graphical content monitoring, and censoring.

WeChat AI supports all the applications of AI on the WeChat platform. They include voice recognition, QR code image scanning, machine translation, chatbots to entertain users, music/TV, and voice lock security. It uses speech recognition and audio processing, natural language processing, image and video processing, data mining and text understanding, and distributed machine learning.

The most important challenges include government regulation, reputation, and competition risks.

Tencent is exposed to a lot of regulatory and compliance risks as the consumer internet and AI are coming under more scrutiny in most countries, including China. Privacy, data protection, and consumer protection laws apply to Tencent in its social networks and gaming activities. Another set of laws and regulations in the financial sector, such as banking laws, investor protections, financial regulations and compliance, and risk regulations, applies to Tencent’s fintech activities.

The Chinese government seems to have some control over WeChat and could present some potential risk for Tencent’s international activities. The US government is for instance attempting to ban WeChat for American users because it might expose them to some security risk.

Internet and gaming activities can sometimes be perceived as damaging if they lead to psychological problems such as addiction, especially among young customers, and Tencent has to be careful in evaluating the social impact of its businesses. Furthermore, some activities in AI such as policing or surveillance can be controversial in some countries and present some reputation risk for Tencent.

Business competition is another challenge as consumers can change their behaviors and adopt new platforms, new products and services offered by other firms. If Tencent does not keep up with innovation, it might lose users and market share. In fintech, Ant Financial with AliPay is for instance a significant competitor. Tencent is very dominant in gaming but consumer taste can change quickly and large investments are required to keep up with the latest technologies such as augmented reality (AR) and virtual reality (VR).

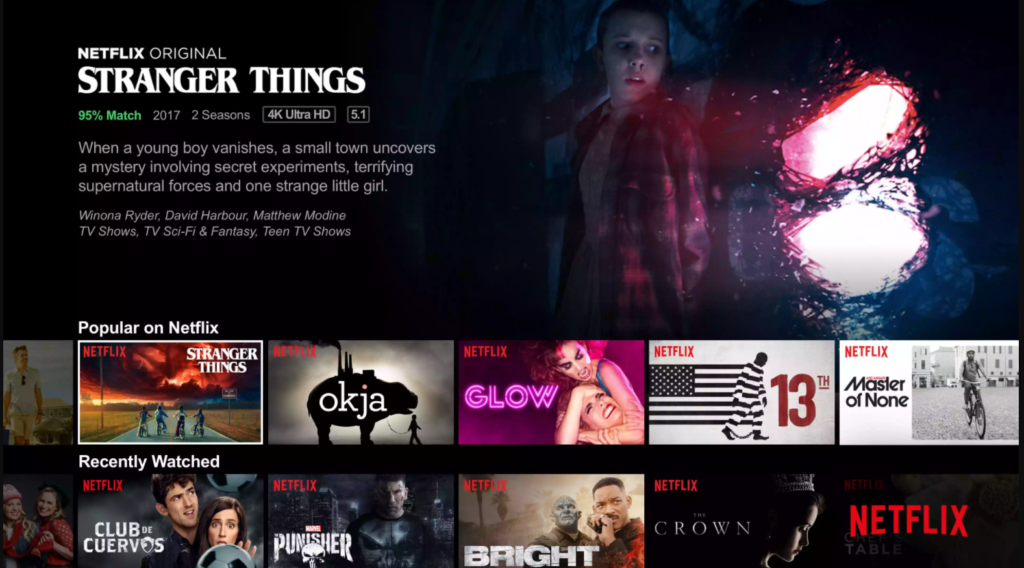

Netflix is the largest video streaming service company in the world, present in 190 countries and serving around 195 million customers. It has annual revenues of close to 20 billion dollars and a market capitalization of over 200 billion dollars. It started in 1997 with DVD rentals and sales by mail and started video streaming in 2007. Netflix is available on many platforms including TVs, phones, and tablets. Netflix is also involved in the production of original content and in movie production with Netflix Studio.

Netflix is mostly a digital company with its infrastructure run in the cloud with AWS. It streams billions of hours of content every month in many countries and many languages. It collects a large amount of data from its users and strives to provide them with real-time recommendations based on their viewing history and preferences. Its objective is to keep its users watching the most enjoyable shows on its platform. It needs AI to operate at this scale.

On its excellent blog, Netflix describes how AI and machine learning are used in different areas of its business.

Netflix needs to help its customers find content to watch on its platform. A customer can watch a film she enjoys but will then be looking for another one to watch, maybe with the same theme (action, romantic comedy, science fiction, and so on), same director, or same cast.

Each user has a personalized page with recent views, trends, and recommendations by category, as well as original Netflix content. Everything on the page is customized to the viewer, including the suggested categories, films or series, and even their visuals. The image representing the film can show a particular actor or graphic that will attract the attention of the viewer.

Netflix uses several machine learning algorithms to select the content to show on the user’s home page. In particular, it uses A/B testing and contextual bandits. It runs real-time experiments with different page configurations and collects information on which configuration gets the most clicks. It knows which film the user ends up watching and whether the user watched it to the end. It mixes predictions based on the user’s characteristics, preferences, and history with more randomized suggestions to uncover more information on the user’s preferences.
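The trade-off between showing what is predicted to work and trying randomized suggestions can be illustrated with an epsilon-greedy bandit, a simpler relative of the contextual bandits mentioned above (it ignores the user context). This is a generic textbook sketch, not Netflix’s system; the artwork names, click rates, and parameters are made up for the simulation.

```python
import random


class EpsilonGreedy:
    """Show the best-performing option most of the time, and a random
    option with probability epsilon to keep learning about the others."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {arm: 0 for arm in self.arms}
        self.clicks = {arm: 0 for arm in self.arms}

    def click_rate(self, arm):
        """Observed click rate so far (0.0 for an arm never shown)."""
        return self.clicks[arm] / self.shows[arm] if self.shows[arm] else 0.0

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)       # explore
        return max(self.arms, key=self.click_rate)  # exploit

    def update(self, arm, clicked):
        self.shows[arm] += 1
        self.clicks[arm] += int(clicked)


# Simulated viewers with hypothetical true click rates per artwork.
true_rates = {"artwork_a": 0.02, "artwork_b": 0.30, "artwork_c": 0.05}
bandit = EpsilonGreedy(true_rates, epsilon=0.2, seed=42)
viewer_rng = random.Random(7)

for _ in range(20_000):
    arm = bandit.choose()
    bandit.update(arm, viewer_rng.random() < true_rates[arm])

most_shown = max(bandit.shows, key=bandit.shows.get)
```

After enough impressions the bandit concentrates on the artwork with the highest click rate while still spending a fraction of impressions on the alternatives.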

Netflix has to constantly purchase the rights or produce new content for its platform. For TV series, it will often agree to stream the full season without a pilot. It also needs to know how much to invest in new productions. It is using predictive modelling to forecast the demand for new shows. It looks for instance at similar shows, the similarity being measured by some distance between show attributes. Because it has detailed information on shows which have been popular and have found an audience it knows with some probability which new show will be successful.
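Forecasting demand from similar shows can be sketched as a nearest-neighbor estimate. The attribute vectors, titles, and viewer numbers below are invented for illustration, and Euclidean distance is only one assumed choice of similarity measure.

```python
import math


def distance(a, b):
    """Euclidean distance between two attribute vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def forecast_demand(new_show, catalog, k=2):
    """Average the audience of the k most similar past shows."""
    nearest = sorted(
        catalog, key=lambda show: distance(new_show, show["attributes"])
    )[:k]
    estimate = sum(show["viewers"] for show in nearest) / k
    return estimate, [show["title"] for show in nearest]


# Hypothetical attributes: (action, romance, star power), viewers in millions.
catalog = [
    {"title": "Show A", "attributes": (1.0, 0.0, 0.8), "viewers": 12.0},
    {"title": "Show B", "attributes": (0.9, 0.1, 0.7), "viewers": 10.0},
    {"title": "Show C", "attributes": (0.0, 1.0, 0.2), "viewers": 3.0},
]

estimate, neighbors = forecast_demand((1.0, 0.0, 0.7), catalog, k=2)
```

The new show resembles the two action titles far more than the romance, so its forecast is the average of their audiences.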

Netflix is producing its own movies with Netflix Studio. It has optimized the movie creation life-cycle from pre-production, planning, scheduling, production, and post-production to marketing using data science and machine learning. For instance, scheduling is treated as a constrained optimization problem. Given the availability of the film crew, director, actors, and location, it can generate an optimal schedule in a very short time. It also chooses which film to produce and how much to invest in each film given the likelihood that it will attract sufficient viewers on its platform. Netflix has borrowed 20 billion dollars to finance its original productions.
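To make the idea of scheduling under constraints concrete, here is a toy sketch that assigns scenes to shooting days by exhaustive search. The scenes, people, and availabilities are hypothetical, and brute force is used only because the example is tiny; a production system would rely on a dedicated optimization solver.

```python
from itertools import permutations


def best_schedule(scenes, availability, days):
    """Assign each scene to a distinct day on which everyone it requires
    is available, minimizing the last shooting day.

    scenes: {scene name: set of people required}
    availability: {person: set of days the person is available}
    Returns {scene name: day}, or None if no feasible schedule exists.
    """
    names = list(scenes)
    best = None
    for assignment in permutations(days, len(names)):
        feasible = all(
            day in availability[person]
            for scene, day in zip(names, assignment)
            for person in scenes[scene]
        )
        if feasible and (best is None or max(assignment) < max(best)):
            best = assignment
    return dict(zip(names, best)) if best else None


scenes = {
    "opening": {"director", "lead"},
    "chase": {"director", "stunt"},
    "finale": {"director", "lead", "stunt"},
}
availability = {
    "director": {1, 2, 3, 4},
    "lead": {1, 3, 4},
    "stunt": {2, 3},
}
schedule = best_schedule(scenes, availability, days=[1, 2, 3, 4])
```

The finale can only be shot on day 3, the one day all three people are free, which forces the chase onto day 2 and leaves day 1 for the opening.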

Streaming is a technical challenge as Netflix is using over a third of the national internet bandwidth in the US. It has to monitor the quality of the streaming experience for each individual user, who is at a different location and has a specific device, bandwidth, and internet provider. Even before new content is streamed, Netflix checks its quality and tries to predict whether it will have quality issues.

Marketing messages are individualized so that they are more likely to convince non-subscribers to sign up. Netflix has to choose the marketing channel such as YouTube or Facebook or others and what content to show to a potential new member. It is using causal modelling to evaluate the effectiveness of its marketing spending.

A challenge for Netflix is to keep licensing and producing attractive content for its customer base. If tastes change, its models have to capture the change and quickly recommend appropriate new content. Netflix competes for customer attention and has to compete with other activities such as VR video games or social networks. Netflix is not paying for the internet infrastructure per se, but if it continues to be a significant user of the national bandwidth, it might be asked to pay for it or to reduce the quality of its video streaming.

DBS Bank is the largest bank in Singapore and Southeast Asia with an international presence in China, Taiwan, Hong Kong, India, and Indonesia. It operates in consumer banking, wealth management (10.8 million customers in 2019), and institutional and SME banking (240,000 customers) across 18 markets globally.

It started a digital transformation process in 2014 to modernize its business operations and become a fully digital bank. It has since then received many awards as the best digital bank and the best bank.

It is not clear when AI became prevalent at DBS Bank but it has embraced digital transformation, the cloud, data, and analytics very early on to become more competitive and disrupt itself before being disrupted by competition from other banks, fintech companies, and foreign tech conglomerates such as Alibaba. In its early years, DBS Bank had a reputation for poor customer service.

Among the Singaporean banks, it offers more mobile apps (Figure 2) and has adopted mobile and digital banking to acquire, retain, and engage its customer base.

Figure 2. DBS mobile apps

DBS Bank has numerous initiatives that leverage AI and analytics:

DBS Bank owns DBS PayLah!, a digital wallet used by its 1.6 million customers to make payments in stores, pay bills, order meals online, book shows, travel, and taxis, and make transfers to other users. It has many platform partners and uses its insights on its users for cross-marketing initiatives.

DBS Bank uses contextualized marketing to sell products to its customers. It calls this hyper-personalization, and it is very similar to the recommendation systems (for products or ads) seen in other industries. This kind of personalized service used to be available only to high-net-worth individuals in private banking but can now be offered to all its clients thanks to technology.

DBS Bank uses sentiment analysis to understand its clients better and address their needs and requests. This lowers the cost of customer support and increases customer satisfaction. Sentiment analysis leverages recent progress in Natural Language Processing by identifying positive and negative keywords and sentences in text and speech.
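The keyword-based approach described above can be sketched in a few lines. The word lists and example messages are made up for illustration, and this is not DBS Bank’s implementation; modern systems typically rely on trained language models rather than fixed keyword lists.

```python
import re

# Hypothetical keyword lists; a real lexicon would be far larger.
POSITIVE = {"great", "helpful", "fast", "thanks", "love"}
NEGATIVE = {"slow", "error", "frustrated", "unable", "bad"}


def sentiment_score(text: str) -> int:
    """Count of positive keywords minus count of negative keywords."""
    words = re.findall(r"[a-z']+", text.lower())
    positive = sum(word in POSITIVE for word in words)
    negative = sum(word in NEGATIVE for word in words)
    return positive - negative


def sentiment_label(text: str) -> str:
    """Map the score to 'positive', 'negative', or 'neutral'."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


praise = sentiment_label("Thanks, the agent was fast and helpful")
complaint = sentiment_label("The app is slow and I am unable to log in")
request = sentiment_label("I would like to open an account")
```

A label like this could be used to prioritize complaints for a human agent while routine requests are handled automatically.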

In India and Indonesia, DBS Bank uses data-driven algorithmic credit underwriting models to approve small ticket-size loans to individuals through their mobile phones. These markets are much larger than Singapore and DBS Bank has to rely on automation and algorithms to service such markets. Mobile phones are also key to success because large shares of the population are under-banked.

DBS Bank is developing automation and data-driven capabilities for credit risk assessment and monitoring of the credit-sensitive assets in its portfolios to help reduce their downgrade risk.

DBS Bank is also building a credit platform for its Institutional Banking Group to manage and modernize its credit workflow. It has rolled out the platform to several regions in Asia.

In Wealth Management, DBS Bank combines robo-advisors with human advice in its DBS digiPortfolio product. It offers customized market research and insights on its DBS iWealth platform.

DBS Bank is using artificial intelligence models to manage financial crime risks through dashboards, advanced customer and counterparty network monitoring, and priority ranking of financial crime risk.

DBS Bank adopted a Platform Operating Model strategy in 2018. These platforms let business and technology teams collaborate on common projects and share data, models, analytical tools, predictive analytics, and workflow processes. DBS Bank has deployed over 33 such platforms across its business.

DBS Bank is using AI models in its call centers in Singapore and India to predict customer issues, route the calls more efficiently, and address the issues automatically.

DBS Bank has built APIs in the real estate, education, healthcare, insurance, transport, logistics, and e-commerce sectors to connect with its ecosystem partners and cross-sell its services to their shared customers using contextualized marketing.

As a financial institution, DBS Bank is exposed to credit and financial risk, financial crime risk, data governance and protection risk, cybersecurity risk, regulatory and reputational risk. As it expands its digital footprint, cybersecurity and data protection are becoming fundamental to the credibility of its digital operations.